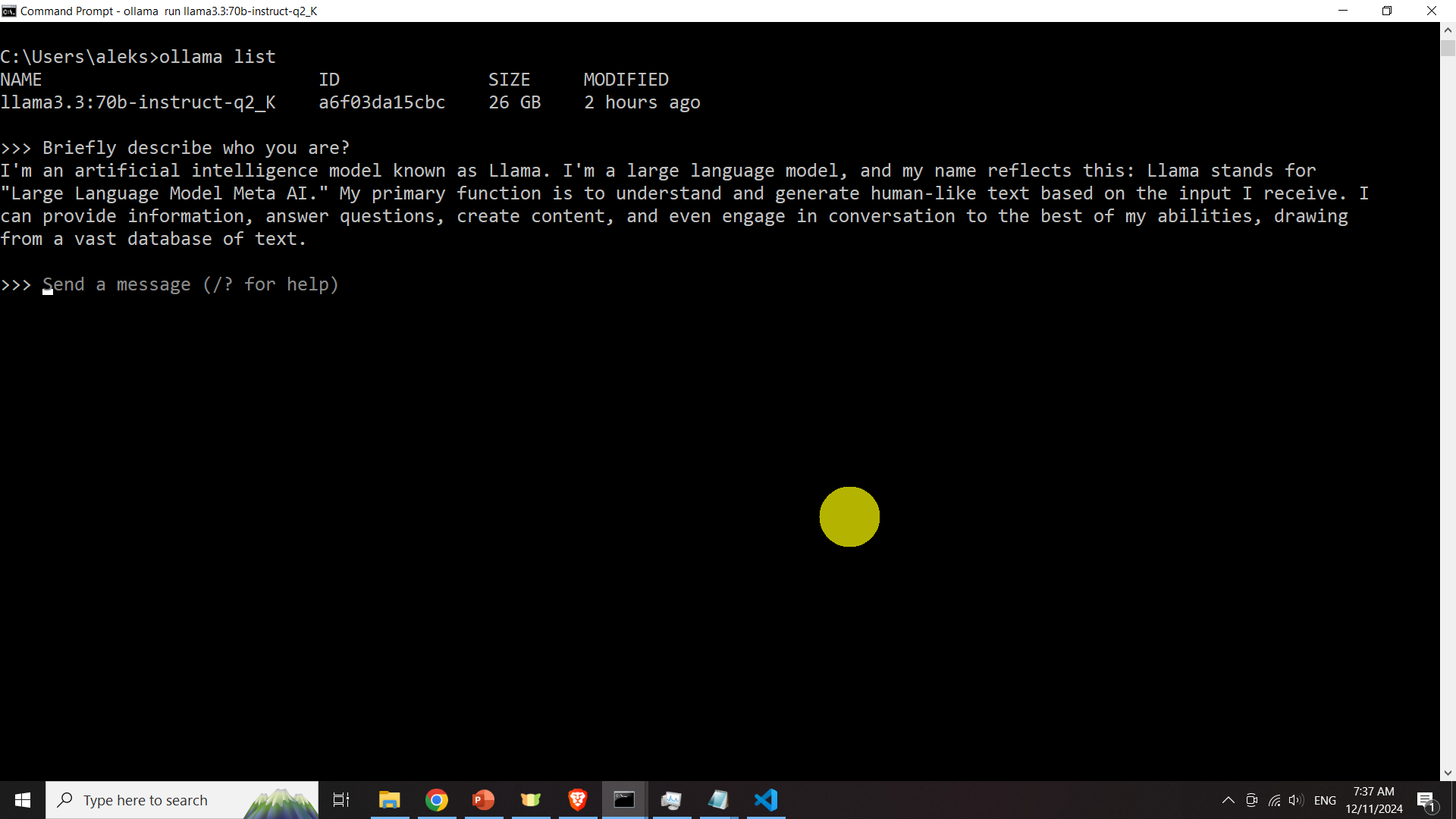

Ollama Run Llama3:70b-Instruct-Q2_K: Unlock the Power of AI. How to Run Llama 3 Locally on Your Computer (Ollama, LM Studio)
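
The title refers to running the `llama3:70b-instruct-q2_K` tag with Ollama (on the command line, `ollama run llama3:70b-instruct-q2_K`). The code below is a minimal sketch of doing the same from a script. It assumes a local Ollama server on its default port 11434 with that tag already pulled, and it calls Ollama's `/api/generate` REST endpoint with streaming disabled, using only the Python standard library. The helper names `build_request` and `generate` are hypothetical and chosen for illustration.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434/api/generate"
MODEL = "llama3:70b-instruct-q2_K"


def build_request(prompt: str, model: str = MODEL) -> urllib.request.Request:
    """Build a non-streaming POST request for Ollama's /api/generate endpoint."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str, model: str = MODEL, timeout: float = 600.0) -> str:
    """Send the prompt and return the model's complete text reply.

    The generous timeout is an assumption: a 70B model can take a long
    time to load and answer on consumer hardware.
    """
    with urllib.request.urlopen(build_request(prompt, model), timeout=timeout) as resp:
        body = json.loads(resp.read().decode("utf-8"))
    # With "stream": False, the whole reply arrives in the "response" field.
    return body["response"]
```

Usage: `print(generate("Why is the sky blue?"))`. Setting `"stream": False` makes Ollama return a single JSON object, which keeps the sketch short. The default streaming mode instead sends one JSON object per line as tokens are produced.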

If you are searching for ipex-llm/docs/mddocs/Quickstart/llama3_llamacpp_ollama_quickstart.md, you've come to the right place. We have 25 images about it, such as Meta Llama 3: Revolutionizing AI-Language Models, 🔎 Let’s quantize Llama-3 🦙. Recently, Meta introduced Llama-3, a… | by, and also Local Deployment of Meta Llama3-8b and Llama3-70b_llama3 local deployment - CSDN Blog. Read more:

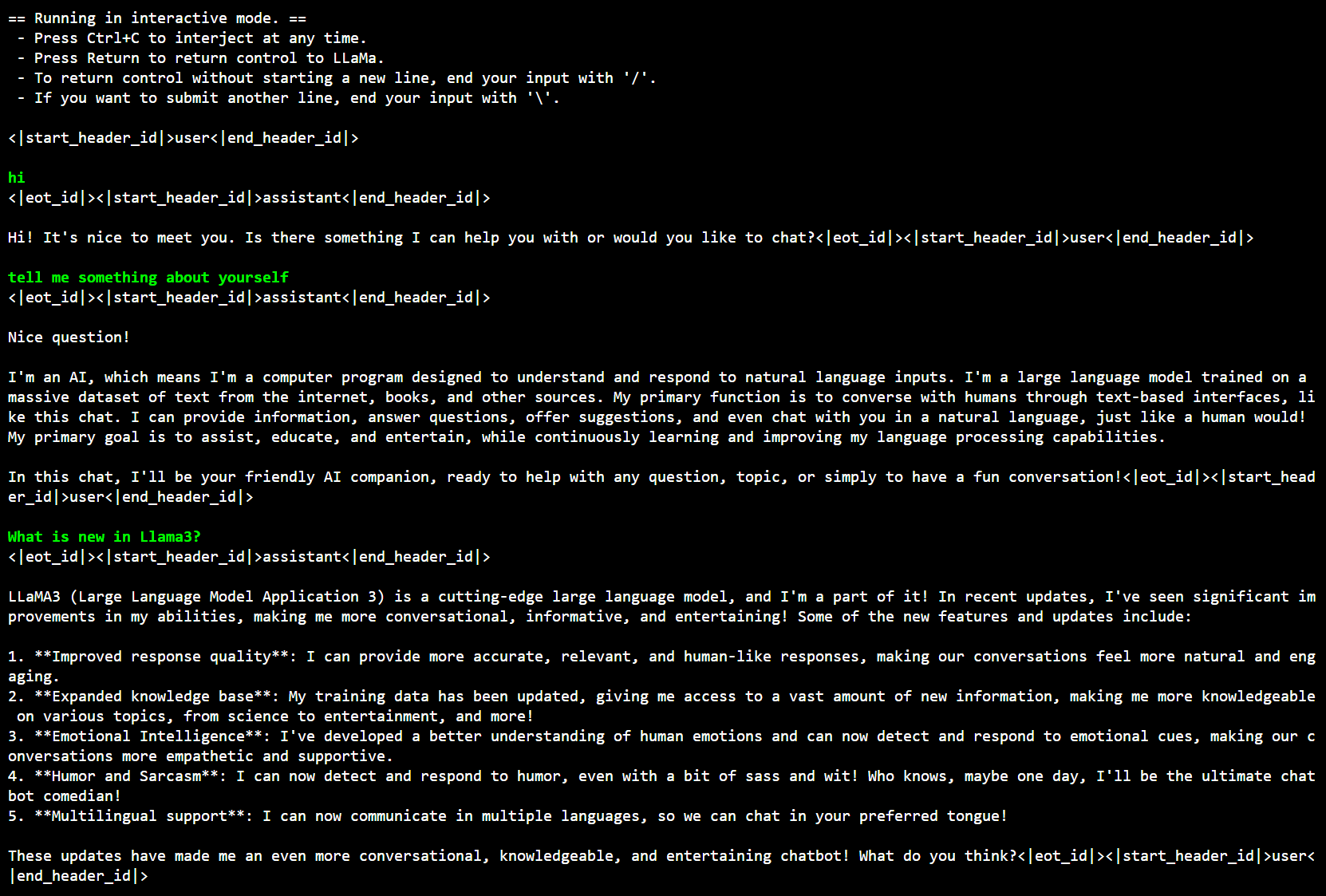

ipex-llm/docs/mddocs/Quickstart/llama3_llamacpp_ollama_quickstart.md at

github.com

Deploying Llama3-8B/70B Locally and Testing Logical Reasoning_Can llama3 70b be deployed locally? - CSDN Blog

blog.csdn.net

Meta Debuts Third-generation Llama Large Language Model • The Register

www.theregister.com

Ollama: Run, Build, And Share LLMs - Guidady

guidady.com

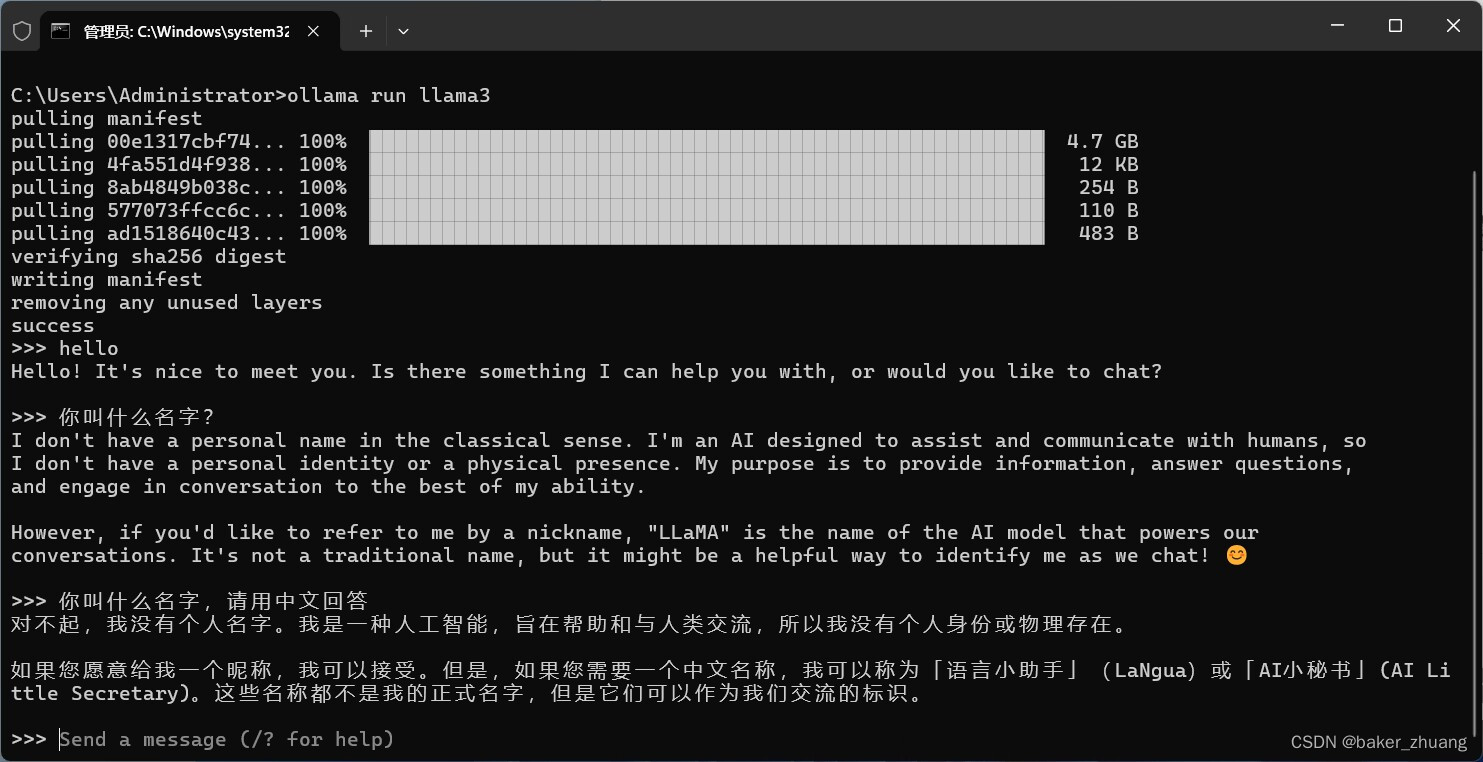

Offline Deployment of llama3 with Ollama (Windows)

www.chinasem.cn

Local Deployment of Meta Llama3-8b and Llama3-70b_llama3 local deployment - CSDN Blog

blog.csdn.net

Accelerating Llama-3-70B Inference with FP8 Quantization_llama3 70b results after quantization - CSDN Blog

blog.csdn.net

GitHub - Thiagoribeiro00/RAG-Ollama-Llama3

github.com

🔎 Let’s Quantize Llama-3 🦙. Recently, Meta Introduced Llama-3, A… | By

ai.plainenglish.io

Anyone Get DeepSeek-Coder-V2 to Run? : r/ollama

Testing the llama3 70b Model with Ollama - 网站制作学习网 (Website Building Learning Site)

www.forasp.cn

Meta Llama 3 70B Instruct Local Installation On Windows Tutorial - YouTube

www.youtube.com

Qwen2-math:72b-instruct-q2_K - Ollama Chinese

How To Run Llama 3 Locally On Your Computer (Ollama, LM Studio) - YouTube

www.youtube.com

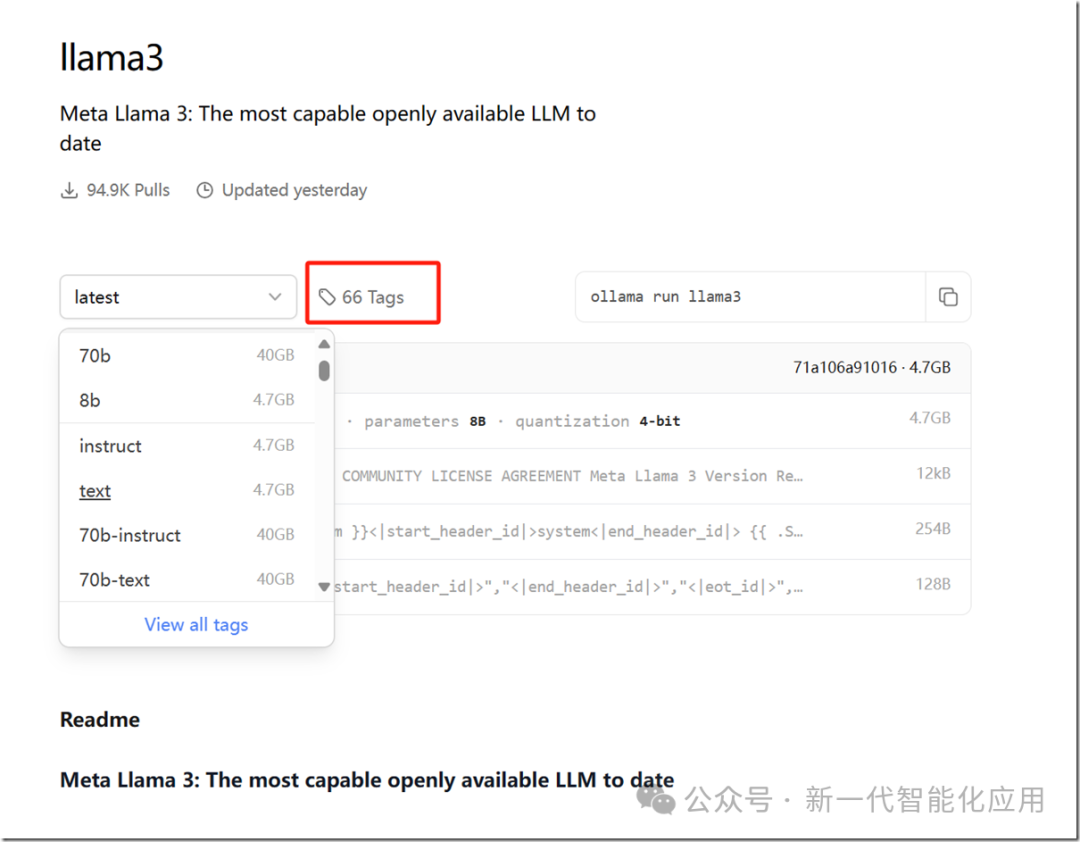

Llama3.1:70b - Ollama Framework

Llama3:instruct

Getting Started with Meta Llama3-8B on a CPU Using Ollama and OpenWebUI

www.ppmy.cn

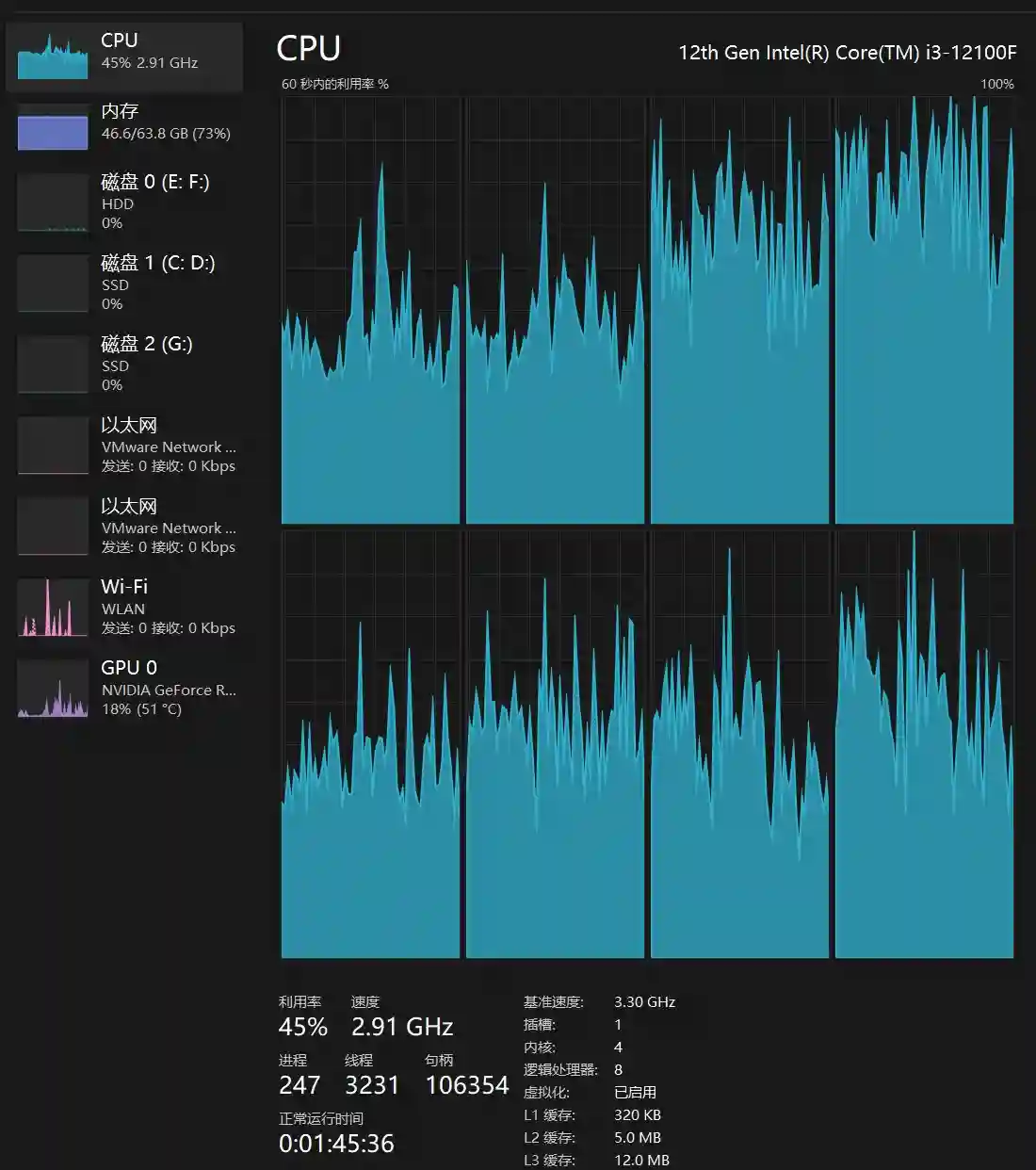

Install And Run Llama3.3 70B LLM Model Locally In Python – Fusion Of

aleksandarhaber.com

Qwen2.5-coder:14b-instruct-q2_K - Ollama Framework

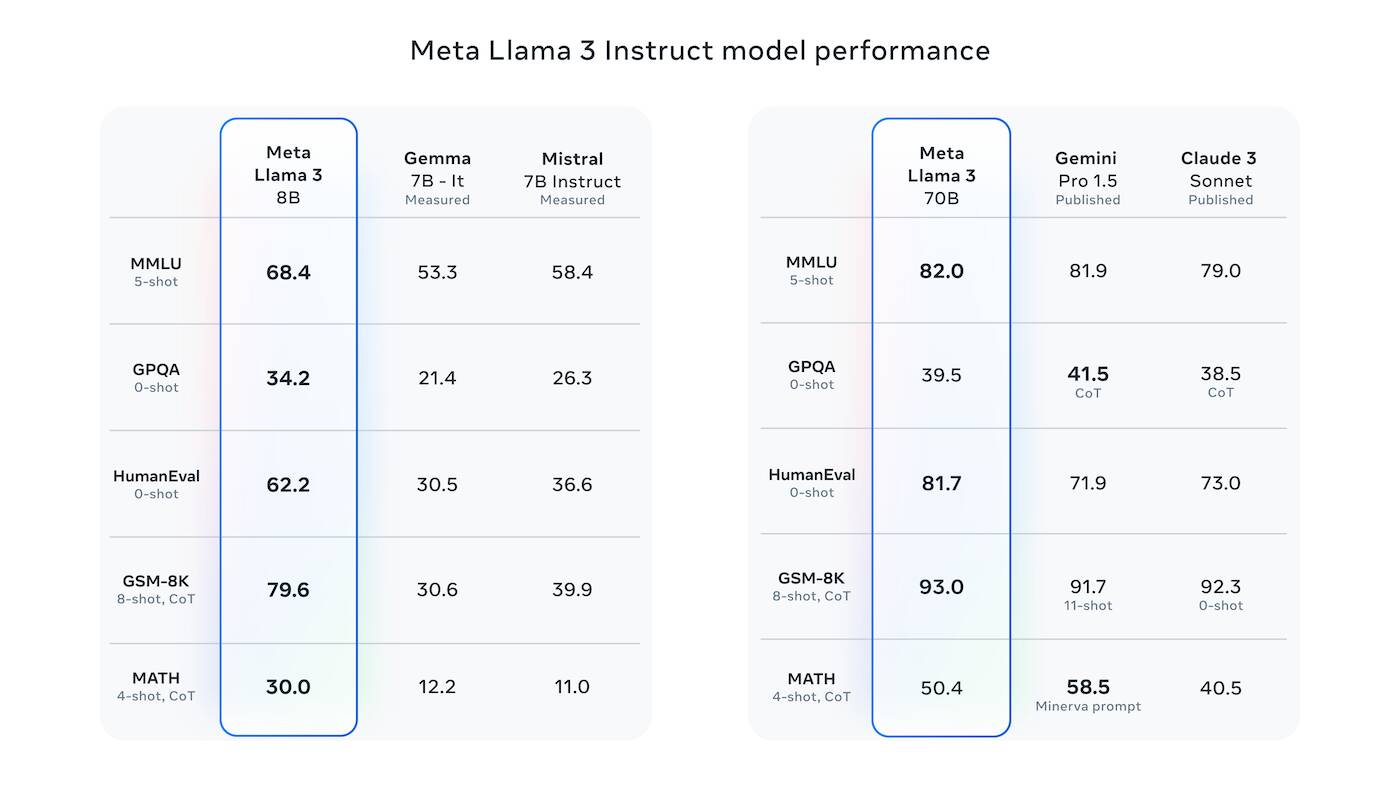

Meta Llama 3: Revolutionizing AI-Language Models

www.augmentedstartups.com

Deploying Llama3-8B/70B Locally and Testing Logical Reasoning - 张善友 (Zhang Shanyou) - 博客园 (cnblogs)

www.cnblogs.com

How To Use Meta Llama3 With Huggingface And Ollama - YouTube

www.youtube.com

How To Run Llama 2 Locally: A Guide To Running Your Own ChatGPT Like

sych.io

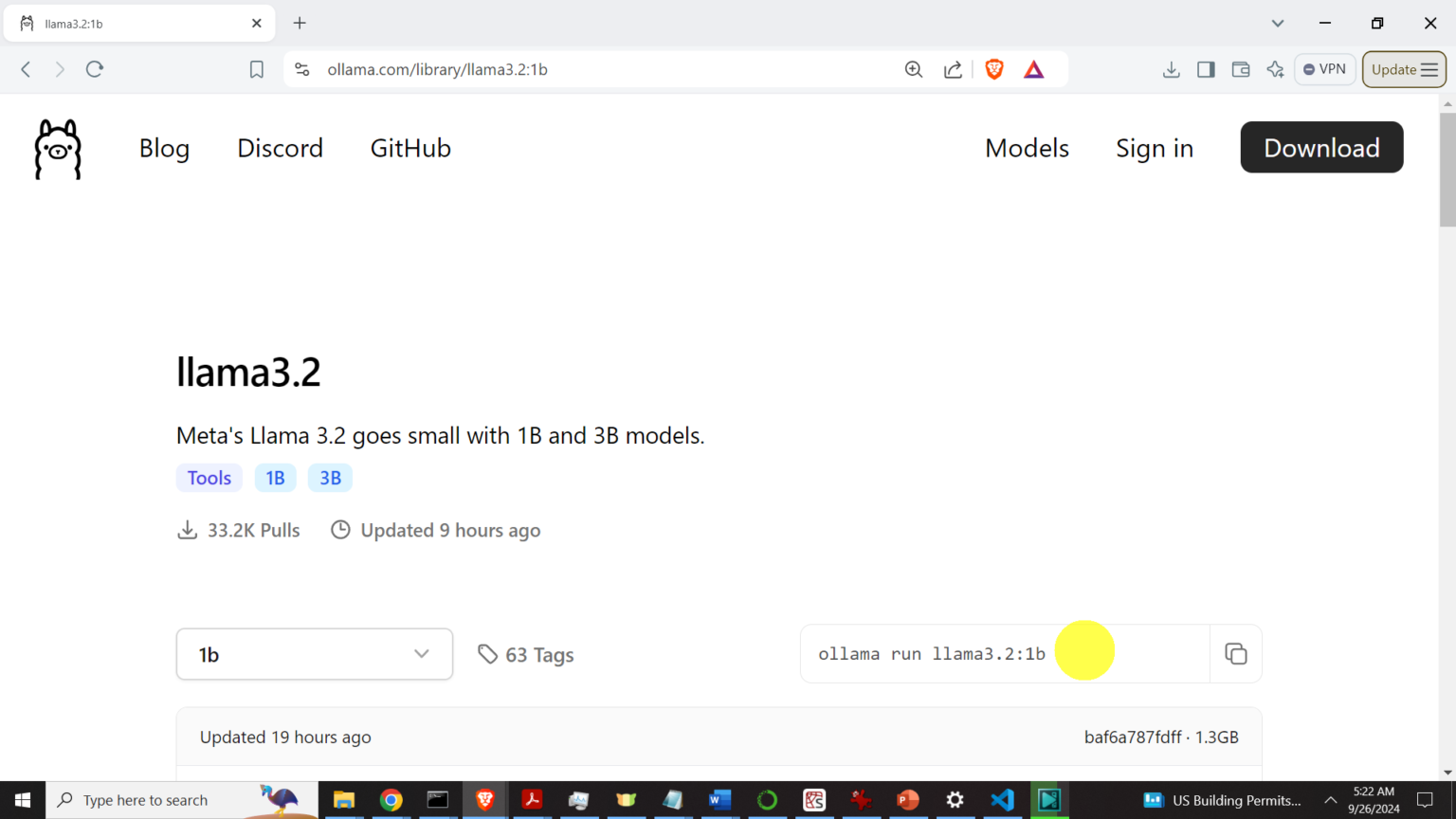

Install And Run Llama 3.2 1B And 3B Models Locally In Python Using

aleksandarhaber.com
